Introduction

In Google Kubernetes Engine (GKE), the Ingress feature serves to expose services outside the cluster, establishing a layer 7 load balancer. However, traditional Ingress implementations pose certain limitations. Specifically, they mandate that Ingress and its associated services reside in the same namespace. Additionally, all routes and rules are confined within a single YAML file, often leading to complexity when managing multiple rules and annotations. This complexity further escalates when multiple teams share the Ingress resource, risking route disruptions caused by changes made by individual teams.

The GKE Gateway addresses these challenges effectively. It enables cross-namespace routing, allowing the Gateway to exist in its dedicated namespace—a manageable space for platform teams. Application teams can independently manage their routes and share the Gateway. The GKE Gateway is Google Kubernetes Engine’s implementation of the Kubernetes Gateway API for Cloud Load Balancing. The Kubernetes Gateway API, overseen by SIG Network, is an open-source project comprising diverse resources that model service networking within Kubernetes.

There are two versions of the GKE Gateway controller:

- Single cluster – manages single-cluster Gateways for a single GKE cluster.

- Multi-cluster – manages multi-cluster Gateways for one or more GKE clusters in different regions.

I will cover the multi-cluster Gateway setup as part of this blog.

What is GKE Gateway?

A GKE Gateway is a resource that defines an access point for traffic. Each Gateway references a Gateway Class, which specifies the type of Gateway controller that manages it. GKE provides several Gateway Classes, each of which provisions a different type of Google Cloud load balancer:

- Global External L7 Load Balancer

- Regional External L7 Load Balancer

- Regional Internal L7 Load Balancer

- Global External L7 Load Balancer for multiple clusters

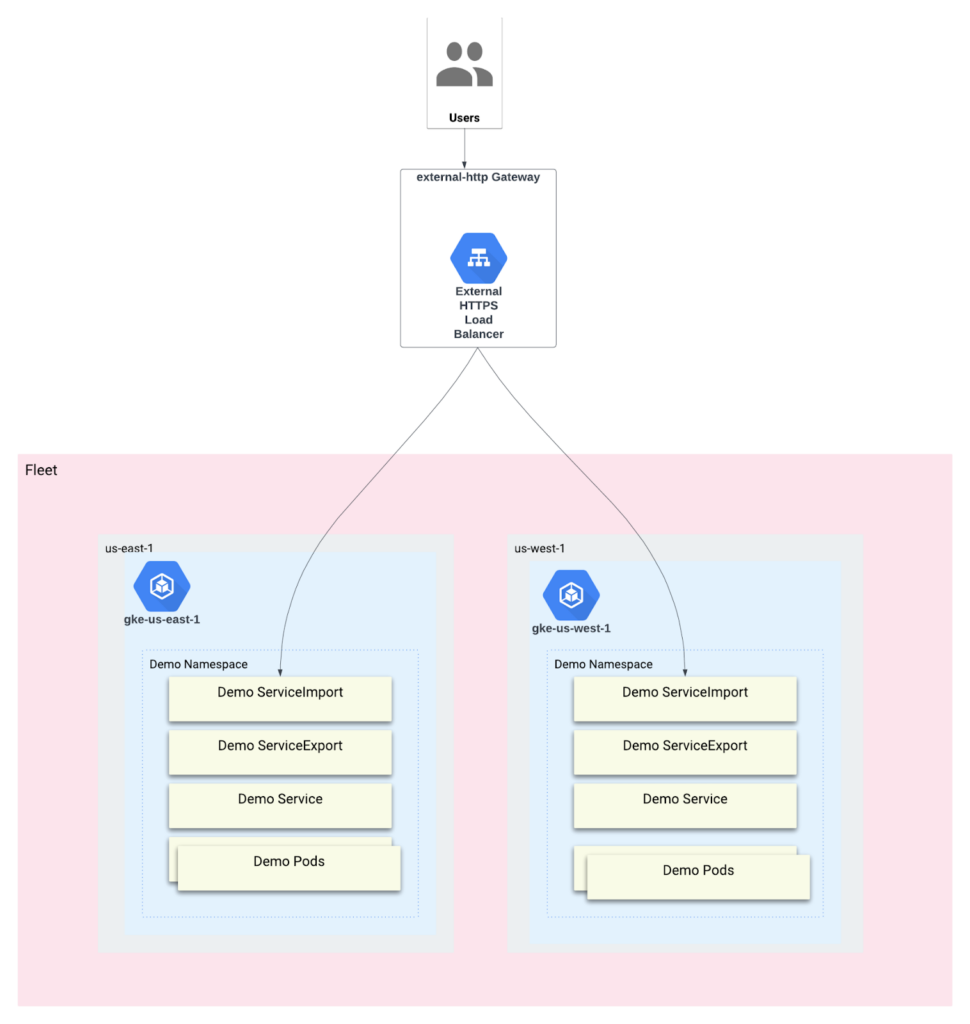

This article focuses on setting up a Global External L7 Load Balancer for multiple clusters using GKE Gateway. In this example, we’ll set up an external, multi-cluster Gateway that load balances internet traffic across two GKE clusters.

Setting up the environment

- Deploy two GKE clusters, ‘gke-us-east-1’ in the ‘us-east1’ region and ‘gke-us-west-1’ in the ‘us-west1’ region. Register both clusters to the project’s fleet and ensure that workload identity is enabled for each.

- Enable multi-cluster services in the project’s fleet.

```shell
gcloud container fleet multi-cluster-services enable --project=<project_name>
```

- Enable the multi-cluster Gateway controller on the config cluster. The config cluster is the GKE cluster in which the Gateway and Route resources are deployed. It is the central place that controls routing across the clusters. In this setup, ‘gke-us-east-1’ is the config cluster.

```shell
gcloud container fleet ingress enable --config-membership=gke-us-east-1 --project=<project_name>
```

- Grant the Network Viewer role required by multi-cluster Services.

```hcl
# Lets the multi-cluster Services importer read network resources in the project.
resource "google_project_iam_member" "network_viewer_role_mcs" {
  project = "<project-name>"
  role    = "roles/compute.networkViewer"
  member  = "serviceAccount:<project-name>.svc.id.goog[gke-mcs/gke-mcs-importer]"
}
```

- Grant the Container Admin role to the GCP Multicluster Ingress service account.

```hcl
# Lets the multi-cluster Gateway controller manage resources in the clusters.
resource "google_project_iam_member" "container_admin_role_mcs" {
  project = "<project-name>"
  role    = "roles/container.admin"
  member  = "serviceAccount:service-<project-number>@gcp-sa-multiclusteringress.iam.gserviceaccount.com"
}
```

Setting up the demo application

Deploy the demo application in both GKE clusters, ‘gke-us-east-1’ and ‘gke-us-west-1’, using the provided manifests.

```yaml
kind: Namespace
apiVersion: v1
metadata:
  name: demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo
  namespace: demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demo
      version: v1
  template:
    metadata:
      labels:
        app: demo
        version: v1
    spec:
      containers:
      # whereami reports the cluster, zone, and node that served the request
      - name: whereami
        image: us-docker.pkg.dev/google-samples/containers/gke/whereami:v1.2.20
        ports:
        - containerPort: 8080
```

Configuring services

With the demo application now running across both clusters, we’ll expose and export the applications by deploying Services and ServiceExports to each cluster. ServiceExport is a custom resource which is mapped to a Kubernetes Service. It exports the endpoints of that Service to all clusters registered to the fleet.

Another custom resource, ServiceImport, is automatically generated by the multi-cluster Service controller for each ServiceExport resource. Multi-cluster Gateways utilize ServiceImports as logical identifiers for a Service.

Deploy the following Service and ServiceExport resources in ‘gke-us-east-1’.

```yaml
# Shared Service: exported under the same name from both clusters
apiVersion: v1
kind: Service
metadata:
  name: demo
  namespace: demo
spec:
  selector:
    app: demo
  ports:
  - port: 8080
    targetPort: 8080
---
kind: ServiceExport
apiVersion: net.gke.io/v1
metadata:
  name: demo
  namespace: demo
---
# Cluster-specific Service: exported only from gke-us-east-1
apiVersion: v1
kind: Service
metadata:
  name: demo-east-1
  namespace: demo
spec:
  selector:
    app: demo
  ports:
  - port: 8080
    targetPort: 8080
---
kind: ServiceExport
apiVersion: net.gke.io/v1
metadata:
  name: demo-east-1
  namespace: demo
```

Deploy the following Service and ServiceExport resources in ‘gke-us-west-1’.

```yaml
# Shared Service: exported under the same name from both clusters
apiVersion: v1
kind: Service
metadata:
  name: demo
  namespace: demo
spec:
  selector:
    app: demo
  ports:
  - port: 8080
    targetPort: 8080
---
kind: ServiceExport
apiVersion: net.gke.io/v1
metadata:
  name: demo
  namespace: demo
---
# Cluster-specific Service: exported only from gke-us-west-1
apiVersion: v1
kind: Service
metadata:
  name: demo-west-1
  namespace: demo
spec:
  selector:
    app: demo
  ports:
  - port: 8080
    targetPort: 8080
---
kind: ServiceExport
apiVersion: net.gke.io/v1
metadata:
  name: demo-west-1
  namespace: demo
```

After deploying the Services and ServiceExports, verify that ServiceImports are created in each cluster.

```shell
kubectl get serviceimports --namespace demo
```

The expected output should resemble the following:

```
# gke-us-west-1
NAME          AGE
demo          1m30s
demo-west-1   1m30s

# gke-us-east-1
NAME          AGE
demo          1m30s
demo-east-1   1m30s
```

Configuring Gateways and HTTPRoute

Once the application and services are deployed, we can configure Gateway and HTTPRoute in the config cluster. To distribute traffic across both clusters, we’ll utilize the Gateway Class ‘gke-l7-global-external-managed-mc’ for creating an External Load Balancer.

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: external-http
  namespace: demo
spec:
  # Multi-cluster class backed by the global external Application Load Balancer
  gatewayClassName: gke-l7-global-external-managed-mc
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      kinds:
      - kind: HTTPRoute
```

Deploying the Gateway creates a load balancer, an external IP address, a forwarding rule, two backend services, and a health check.
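The listener above leaves `allowedRoutes.namespaces` unset, so by the Gateway API default only routes in the Gateway’s own namespace can attach. The cross-namespace model described in the introduction could be sketched roughly as follows. The namespace `team-a`, the label `shared-gateway-access`, the hostname, and the ServiceImport `team-a-app` are hypothetical names that are not part of this setup, so treat this as an illustration rather than a tested configuration.

```yaml
# Sketch only: everything marked "hypothetical" is an assumption for illustration.
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: external-http
  namespace: demo
spec:
  gatewayClassName: gke-l7-global-external-managed-mc
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      kinds:
      - kind: HTTPRoute
      namespaces:
        from: Selector                      # the default is "Same"
        selector:
          matchLabels:
            shared-gateway-access: "true"   # hypothetical label
---
kind: HTTPRoute
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: team-a-route                        # hypothetical
  namespace: team-a                         # hypothetical application namespace
spec:
  parentRefs:
  - name: external-http
    namespace: demo                         # the Gateway lives in another namespace
  hostnames:
  - "team-a.example.com"                    # hypothetical
  rules:
  - backendRefs:
    - group: net.gke.io
      kind: ServiceImport
      name: team-a-app                      # hypothetical ServiceImport in team-a
      port: 8080
```

For the route to attach, the `team-a` namespace would need to carry the label named in the selector, and, as with every other route in this article, the HTTPRoute would be deployed to the config cluster.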

Deploy the following HTTPRoute resource in the config cluster (gke-us-east-1).

```yaml
kind: HTTPRoute
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: public-demo-route
  namespace: demo
  labels:
    gateway: external-http
spec:
  hostnames:
  - "demo.example.com"
  parentRefs:
  - name: external-http
  rules:
  # /west goes only to the Service exported from gke-us-west-1
  - matches:
    - path:
        type: PathPrefix
        value: /west
    backendRefs:
    - group: net.gke.io
      kind: ServiceImport
      name: demo-west-1
      port: 8080
  # /east goes only to the Service exported from gke-us-east-1
  - matches:
    - path:
        type: PathPrefix
        value: /east
    backendRefs:
    - group: net.gke.io
      kind: ServiceImport
      name: demo-east-1
      port: 8080
  # Everything else goes to the Service exported from both clusters
  - backendRefs:
    - group: net.gke.io
      kind: ServiceImport
      name: demo
      port: 8080
```

When the HTTPRoute is deployed, requests to /west are directed to pods running in the ‘gke-us-west-1’ cluster, requests to /east are directed to pods running in the ‘gke-us-east-1’ cluster, and any other paths are routed to pods in either cluster based on their health, capacity, and proximity to the requesting client.
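The health part of that decision comes from the load balancer’s health checks against the backends. If the default check does not suit the application, GKE’s HealthCheckPolicy can be attached to a ServiceImport. The sketch below assumes the application answers health probes at `/healthz` on port 8080. That path, the policy name, and the choice of an HTTP check are assumptions rather than part of the setup described here, so check them against the application and the GKE policy documentation before use.

```yaml
# Sketch only: the policy name, path, and port are assumptions for illustration.
apiVersion: networking.gke.io/v1
kind: HealthCheckPolicy
metadata:
  name: demo-health-check        # hypothetical name
  namespace: demo
spec:
  default:
    config:
      type: HTTP
      httpHealthCheck:
        port: 8080               # assumed to match the Service port
        requestPath: /healthz    # assumed health endpoint
  targetRef:
    group: net.gke.io
    kind: ServiceImport
    name: demo
```

Like the Gateway and HTTPRoute, a policy of this kind would be applied in the config cluster, in the same namespace as the ServiceImport it targets.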

If traffic is sent to the root path of the domain, the load balancer sends it to the closest region.

```shell
curl -H "host: demo.example.com" http://<EXTERNAL_IP_ADDRESS_OF_GATEWAY>
```

The sample output shows that the request was served from a pod running in the gke-us-west-1 cluster.

```json
{
  "cluster_name": "gke-us-west-1",
  "zone": "us-west1-a",
  "host_header": "demo.example.com",
  "node_name": "gke-gke-us-west-1-default-pool-2367899-5y53.c.<project-id>.internal",
  "project_id": "<project-id>",
  "timestamp": "2024-01-30T14:23:14"
}
```

If traffic is sent to the /east path, the request is served from a pod running in the gke-us-east-1 cluster.

```shell
curl -H "host: demo.example.com" http://<EXTERNAL_IP_ADDRESS_OF_GATEWAY>/east
```

The sample output confirms that the request was served from the gke-us-east-1 cluster.

```json
{
  "cluster_name": "gke-us-east-1",
  "zone": "us-east1-b",
  "host_header": "demo.example.com",
  "node_name": "gke-gke-us-east-1-default-pool-678899-5p93.c.<project-id>.internal",
  "project_id": "<project-id>",
  "timestamp": "2024-01-30T14:25:14"
}
```

If traffic is sent to the /west path, the request is served from a pod running in the gke-us-west-1 cluster.

```shell
curl -H "host: demo.example.com" http://<EXTERNAL_IP_ADDRESS_OF_GATEWAY>/west
```

The sample output confirms that the request was served from the gke-us-west-1 cluster.

```json
{
  "cluster_name": "gke-us-west-1",
  "zone": "us-west1-a",
  "host_header": "demo.example.com",
  "node_name": "gke-gke-us-west-1-default-pool-2367899-5y53.c.<project-id>.internal",
  "project_id": "<project-id>",
  "timestamp": "2024-01-30T14:26:14"
}
```

Securing the Gateway

If you’re deploying the Gateway in a production environment, secure it with TLS and by applying Gateway policies.

- For the domain ‘demo.example.com’, create an SSLCertificate resource (named ‘demo-example-com’ in this example) and reference it in the Gateway configuration as outlined below:

```yaml
kind: Gateway
apiVersion: gateway.networking.k8s.io/v1beta1
metadata:
  name: external-http
  namespace: demo
spec:
  gatewayClassName: gke-l7-global-external-managed-mc
  listeners:
  - name: https
    protocol: HTTPS
    port: 443
    tls:
      mode: Terminate
      options:
        # Name of the pre-created SSLCertificate resource
        networking.gke.io/pre-shared-certs: demo-example-com
    allowedRoutes:
      kinds:
      - kind: HTTPRoute
```

- Google Cloud Armor security policies protect the application from web-based attacks. Each policy is a set of rules that filter traffic on request attributes such as IP address, IP range, region code, or request headers. Once the Cloud Armor policy exists, attach it to the backend with a GCPBackendPolicy. For a multi-cluster Gateway, the policy targets the ServiceImport.

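```yaml
apiVersion: networking.gke.io/v1
kind: GCPBackendPolicy
metadata:
  name: demo
  namespace: demo
spec:
  default:
    # Name of the existing Cloud Armor security policy
    securityPolicy: cloud-armor-policy
  targetRef:
    # ServiceImport belongs to the net.gke.io API group, not the core ("") group
    group: net.gke.io
    kind: ServiceImport
    name: demo
    namespace: demo
```

Conclusion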

The GKE Gateway is a significant step beyond traditional Ingress. Its role-oriented design removes the single-namespace constraint of conventional Ingress and lets platform and application teams share one load balancer across namespaces. This article aimed to provide a clear understanding of how to configure the GKE Gateway for multiple clusters.

References

- https://cloud.google.com/kubernetes-engine/docs/how-to/deploying-multi-cluster-gateways#deploy-gateway

- https://gateway-api.sigs.k8s.io/

- https://cloud.google.com/kubernetes-engine/docs/how-to/secure-gateway

About Vinita Joshi

Vinita, a Senior Cloud Infrastructure Engineer, thrives on crafting solutions on the Google Cloud Platform, boasting extensive expertise in Kubernetes.
